Over the past few decades, classical computing has transformed the way we live and work. It has enabled the emergence of the Internet and digital services that have reshaped human interactions, commerce, and education.

In 2023, the combined power of global data centers reached an estimated 100 zettaflops (100 billion trillion operations per second), supporting billions of users connected every day.

But to better understand how computing has become a driving force in our modern societies, it is essential to revisit the origins of this transformation.

The beginnings of the computing era

The creation of the first computers, such as the ENIAC (Electronic Numerical Integrator and Computer) in 1946, marked the start of a new technological era. These machines, though rudimentary by today’s standards, enabled complex calculations that were previously beyond the reach of the human mind or mechanical tools. John von Neumann, one of the pioneers of modern computing, developed the fundamental architecture that remains at the core of contemporary computers.

In the following decades, progress was exponential. Transistors, introduced in 1947, replaced vacuum tubes, making computers smaller, faster, and more reliable. In the 1970s, microprocessors integrated all essential processor functions onto a single silicon chip and gave birth to personal computing. This miniaturization made computers accessible to a broader audience.

However, this impressive evolution is now reaching its limits. As transistors shrink toward the atomic scale, the physical laws that govern them no longer allow computing power to keep increasing indefinitely. It is in this context that quantum computing emerges as a new frontier.

Quantum computing: a revolution in the making

Quantum computing finds its roots in the 1980s, when Richard Feynman and David Deutsch laid the theoretical groundwork for machines exploiting the principles of quantum mechanics. These ideas were reinforced in 1994 with Shor’s algorithm, which showed that a quantum computer could, in theory, factor large numbers exponentially faster than the best known classical algorithms, highlighting its potential impact on cryptography.
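To make the link between factoring and Shor’s algorithm more concrete, here is a minimal sketch of the classical reasoning around it. Factoring a number n can be reduced to finding the period of the sequence a, a², a³, ... modulo n; the quantum computer’s role is to find that period quickly. In this sketch the period is found by brute force, so nothing here is quantum, and the function names are illustrative assumptions rather than any library’s API.

```python
from math import gcd

def find_period(a: int, n: int) -> int:
    """Smallest r > 0 such that a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force: this is the step a quantum computer would speed up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a: int, n: int):
    """Try to split n into two factors using the period of a modulo n."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None               # odd period: retry with another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # trivial square root: retry with another a
    return gcd(half - 1, n), gcd(half + 1, n)

print(find_period(7, 15))         # 4
print(factor_from_period(7, 15))  # (3, 5)
```

For a number with hundreds of digits, the brute-force loop becomes hopelessly long, which is exactly where the quantum speed-up matters.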

The first prototypes of quantum computers appeared in the 2000s, thanks to the combined efforts of academic institutions and companies like IBM, Google, and D-Wave. In 2019, Google even claimed to have achieved “quantum supremacy” with its Sycamore processor, which performed in 200 seconds a calculation that, by Google’s estimate, would take a classical supercomputer 10,000 years to complete.

How quantum computers work

Unlike classical computers, which use binary bits (0 or 1), quantum computers use qubits, or quantum bits. Thanks to the principle of quantum superposition, a qubit can be in state 0, state 1, or a weighted combination of both at once. A register of n qubits can therefore hold a superposition of all 2^n bit patterns, and a single operation acts on all of them, although a measurement returns only one result.

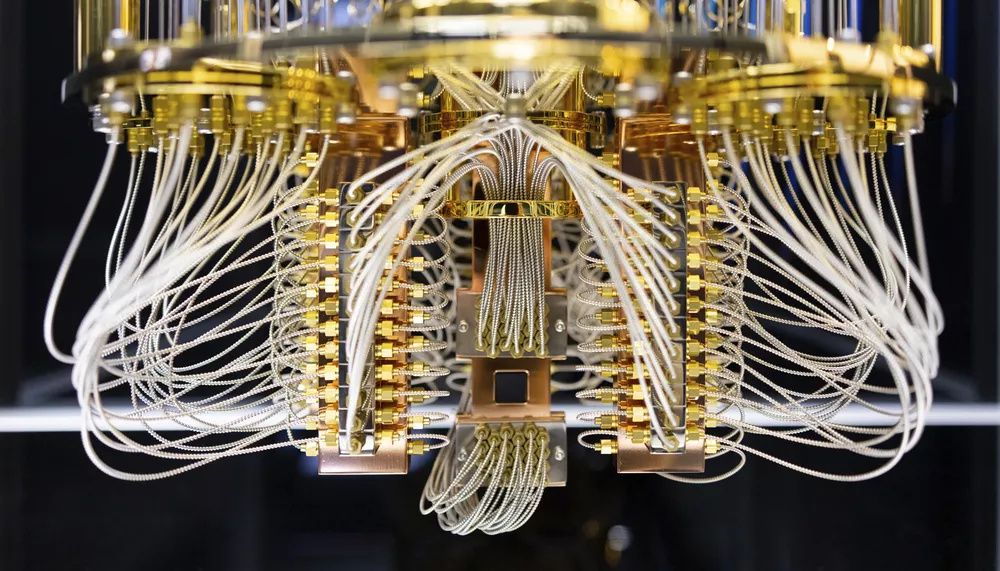
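The idea of superposition can be sketched in a few lines of plain Python. This is a toy classical simulation under simple assumptions, not how a real quantum device is programmed: a qubit is modeled as a pair of complex amplitudes, and the helper names (`hadamard`, `probabilities`, `measure`, `amplitude_count`) are chosen here for illustration.

```python
import math
import random

# A qubit state is a pair of complex amplitudes (alpha, beta) for the
# states 0 and 1, normalized so that |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)

def hadamard(state):
    """Apply the Hadamard gate, which turns state 0 into an equal superposition."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def probabilities(state):
    """Born rule: probability of reading 0 or 1 when the qubit is measured."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(state, rng=random):
    """Simulate one measurement: the outcome is a single classical bit."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

def amplitude_count(n_qubits):
    """Number of complex amplitudes needed to describe n qubits classically."""
    return 2 ** n_qubits

plus = hadamard(ZERO)
p0, p1 = probabilities(plus)
print(round(p0, 3), round(p1, 3))   # 0.5 0.5
print(measure(plus))                # 0 or 1, each with probability 1/2
print(amplitude_count(50))          # 1125899906842624
```

The last line hints at why classical machines struggle to imitate even modest quantum registers: describing 50 qubits already takes about 10^15 amplitudes.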

Generally, qubits are created by manipulating and measuring quantum particles (the smallest known building blocks of the physical universe), such as photons, electrons, or atoms. In many designs, the qubits must be kept extremely cold to minimize noise, which would otherwise cause unintentional decoherence and lead to inaccurate results.

When solving a complex problem, such as factoring a large number, a classical computer is hindered by the enormous number of possibilities it has to work through. Since qubits can maintain a superposition, a quantum computer can approach the problem differently.

Imagine needing to find the combination to a multi-digit safe without knowing the code. With a classical computer, we would be forced to try each combination one by one until finding the correct one—a process that would become extremely long if the number of digits were high. Each attempt would be like a bit recording whether a combination works or not.

In contrast, a quantum computer approaches this problem differently: qubits, thanks to superposition, can represent all possible combinations at once. Instead of testing each combination separately, the quantum computer can use a specific algorithm (for this kind of search, Grover’s algorithm) to amplify the probability of the correct solution and shrink those of the incorrect ones. The number of steps then grows only with the square root of the number of combinations.

The process is comparable to light passing through a prism. Initially, white light contains all colors (or all possible solutions). As it passes through the prism (here, the quantum algorithm), only certain colors are amplified and become visible (the correct solutions), while others cancel out. Thus, the quantum computer identifies the correct combination much faster than a classical computer.
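The amplification described above can be imitated on an ordinary computer for a small safe. The sketch below is a classical simulation of Grover’s search algorithm, assuming a hypothetical safe with 1,024 combinations and an arbitrary secret code; it tracks one amplitude per combination, which is only feasible because the example is tiny.

```python
import math

def grover_search(n_items: int, target: int):
    """Classically simulate Grover's search over n_items combinations.

    Returns the number of rounds used and the final probability of
    reading out the target combination.
    """
    # Equal superposition: every combination starts equally likely.
    amps = [1 / math.sqrt(n_items)] * n_items
    # About (pi / 4) * sqrt(N) rounds bring the target close to certainty.
    rounds = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(rounds):
        # Oracle: flip the sign of the correct combination's amplitude.
        amps[target] = -amps[target]
        # Diffusion: reflect every amplitude about the mean, which grows
        # the marked amplitude and shrinks all the others.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    return rounds, amps[target] ** 2

rounds, p_success = grover_search(n_items=1024, target=417)
print(rounds)             # 25
print(p_success > 0.99)   # True
```

Here 25 rounds are enough to make the right combination almost certain, whereas trying the 1,024 codes one by one takes about 512 attempts on average.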

This unique property of qubits not only makes it possible to solve such problems much more efficiently but also opens up possibilities that are out of reach for classical approaches.

The problem of quantum decoherence

To perform complex calculations much faster, a quantum computer requires a large number of qubits. However, qubits are extremely fragile and challenging to stabilize, and the more of them there are, the more unstable the system becomes. This instability can interrupt calculations before their completion.

Quantum decoherence is the loss of the quantum properties of qubits, such as superposition or entanglement (where multiple qubits are linked so that their states are correlated and can no longer be described independently), due to their interactions with the environment. This phenomenon causes qubits to shift from quantum behavior to classical behavior, nullifying the specific advantages that quantum computers can offer.

Several factors can cause this decoherence:

- Thermal noise: Atomic vibrations due to heat disturb the state of qubits. These thermal fluctuations pose a major challenge, both for quantum systems operating at ambient temperature and for those cooled to just above absolute zero.

- Coupling with the environment: Qubits are never completely isolated from their surroundings. Unintentional interactions with particles such as atoms, photons, or other elements of the system alter their quantum states. For instance, a surrounding particle could destroy entanglement or alter a qubit’s superposition by exchanging energy or information with it. This parasitic coupling represents a leakage of quantum information known as “environmental decoherence.”

- Imprecisions in controls: Manipulating qubits relies on extremely precise techniques, such as carefully calibrated laser or microwave pulses. However, errors can occur, such as incorrect pulse durations, imprecise rotation angles, or slightly offset frequencies. These inaccuracies, often tied to instrument limitations or component variability, can disrupt qubits’ quantum states and accelerate their decoherence.
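Two of these effects can be put into rough numbers with textbook models. The sketch below is a simplified illustration, not a description of any particular machine: it assumes pure dephasing with a single coherence time T2 (the 100-microsecond value is hypothetical) and a single control pulse that overshoots its target angle by a small error.

```python
import math

def plus_state_survival(t: float, t2: float) -> float:
    """Probability that a qubit prepared in an equal superposition is still
    found in that superposition after time t, under pure dephasing."""
    coherence = math.exp(-t / t2)   # decaying "quantumness" of the state
    return 0.5 * (1 + coherence)    # tends to 0.5, a classical coin flip

def over_rotation_error(epsilon: float) -> float:
    """Probability of a wrong outcome when a bit-flip pulse rotates the
    qubit by pi + epsilon radians instead of exactly pi."""
    return math.sin(epsilon / 2) ** 2

T2_MICROSECONDS = 100.0  # hypothetical coherence time, for illustration only
for t in (0, 50, 100, 500):
    print(t, round(plus_state_survival(t, T2_MICROSECONDS), 3))

# A 1% error on the rotation angle of a single pulse.
print(over_rotation_error(0.01 * math.pi))
```

The survival probability drifts from 1 toward 0.5, the point at which the qubit behaves like a random classical bit. The control error per pulse is tiny, but it accumulates over the thousands of pulses a real computation requires.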

Because of these problems, a quantum computer must be well isolated from the outside world, requiring simple and extremely cold systems, far from any interference. However, this isolation creates a paradoxical situation: the more isolated the computer, the harder it becomes to communicate with it (to access the results of its calculations, or control its actions).

Quantum computing and AI: promising synergies

Artificial intelligence relies mainly on algorithms capable of learning, predicting, and analyzing data. Machine learning techniques require considerable computing power, especially when tackling complex datasets. A typical example is deep learning, where a neural network must optimize millions or even billions of parameters to learn effectively from data. This takes time, energy, and highly efficient computers.

Quantum computers could simulate complex models beyond the reach of current machines. One of the major challenges in AI systems lies in training learning models on massive datasets. Today, with models like GPT-4 or architectures such as the Transformer, training a model on supercomputers can take weeks or months. Quantum computers, by leveraging superposition, could explore many configurations in parallel and potentially reduce training times drastically.

In the finance sector, for example, AI systems currently analyze complex market behaviors to predict stock trends. Adding quantum algorithms could make it possible to explore a far larger space of scenarios and significantly improve model performance by accounting for highly complex non-linear correlations that a traditional computer cannot capture.

Over the next few decades, the convergence of AI and quantum computing could lead to unimaginable breakthroughs across various sectors:

- Medicine and biology: Currently, the search for new therapeutic molecules relies on complex simulations conducted by supercomputers. Given the vast combinations of possible molecules, quantum computers could analyze a large number of configurations simultaneously and predict their efficacy in significantly reduced time frames.

- Security and cryptography: Thanks to algorithms like Shor’s, a sufficiently large and reliable quantum computer could break widely used public-key encryption schemes such as RSA in record time. These traditional schemes would then become obsolete. However, artificial intelligence combined with quantum computing could turn this threat into an opportunity. AI systems could be used to design quantum cryptographic protocols that are resistant to hacking attempts.

- Renewable energy and climate: With faster and more accurate predictive models, quantum AI could optimize global energy consumption and simulate climate change scenarios. For example, predicting the evolution of the global climate would require modeling the complex interaction of thousands of variables, such as temperature, humidity, atmospheric pressure, and human activity. Quantum computers, paired with advanced AI models, could simulate these interactions with unparalleled precision.

Furthermore, AI could serve as the conductor for quantum computers, identifying the problems best suited for quantum computation while simplifying their use through intelligent interfaces.

Quantum computing around the world

The rise of quantum technologies has sparked a global race in which companies and governments compete to achieve quantum supremacy. This fierce competition, driven by strategic, economic, and scientific stakes, continuously pushes the boundaries of innovation:

China’s Tianyan-504

In December 2024, China unveiled Tianyan-504, a quantum computer with unprecedented computing power for the country. This machine is based on a superconducting chip called Xiaohong-504, capable of managing 504 quantum bits.

While China clearly displays its ambition to rise to the top of the global hierarchy in quantum computing, Tianyan-504 is not limited to a frantic race for qubits. The project primarily aims to build a solid infrastructure for large-scale quantum systems, emphasizing the development of a comprehensive ecosystem.

IBM’s Osprey

In November 2022, IBM presented its quantum processor “Osprey,” featuring 433 qubits, more than three times the 127 qubits of the previous generation, “Eagle.”

This significant increase in the number of qubits brings IBM closer to its goal of developing quantum systems with more than 1,000 qubits, with ambitions for systems comprising tens of thousands of qubits starting in 2026.

Quantinuum’s H2-1

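Quantinuum has developed the H2-1, a 56-qubit quantum computer. On a random circuit sampling benchmark, the company reported results roughly 100 times better than those of Google’s Sycamore machine, with an estimated energy consumption 30,000 times lower than that of a classical supercomputer running the same task.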

Google’s Willow Quantum Chip

Google’s latest breakthrough, the Willow quantum chip, has 105 superconducting qubits (about double the number of its previous Sycamore chip) and is particularly notable for its advanced error correction. Superconducting qubits are highly sensitive to interactions with their environment, and increasing the number of qubits generally amplifies the resulting errors.

However, Willow seems to reverse this trend. Hartmut Neven, director of Google Quantum AI, explains: “We tested networks of increasingly larger physical qubits, moving from a 3×3 encoded qubit grid to a 5×5 grid, then to a 7×7 grid. At each step, our advances in quantum error correction reduced the error rate by half.”
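The scaling described in this quote can be written down as a small calculation. The sketch below rests on two assumptions that the quote does not spell out: that each grid is a standard surface-code patch, in which a d×d grid of data qubits is accompanied by d×d − 1 measurement qubits, and that the error rate is divided by exactly 2 at each step. The starting error rate of 1% is hypothetical and is not a figure reported by Google.

```python
def physical_qubits(distance: int) -> int:
    """Qubits in one surface-code patch: d*d data qubits plus d*d - 1
    measurement qubits (assumed layout)."""
    return 2 * distance * distance - 1

def logical_error_rate(distance: int, rate_at_d3: float,
                       suppression: float = 2.0) -> float:
    """Error rate of the encoded qubit, divided by `suppression` each time
    the grid grows by one step (3x3 -> 5x5 -> 7x7 ...)."""
    steps = (distance - 3) // 2
    return rate_at_d3 / suppression ** steps

HYPOTHETICAL_RATE_AT_D3 = 0.01  # illustrative starting point only
for d in (3, 5, 7):
    print(d, physical_qubits(d), logical_error_rate(d, HYPOTHETICAL_RATE_AT_D3))
# 3 17 0.01
# 5 49 0.005
# 7 97 0.0025
```

The point of the result is the direction of the trend: adding qubits makes the encoded qubit more reliable rather than less, which is the opposite of what happens without error correction.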

But Willow is not only adept at correcting errors; it is also extremely powerful. It performed a standard benchmark calculation in under five minutes, a task that would take one of today’s fastest supercomputers 10 septillion years (10^25 years, or ten million billion billion years) to complete!

A technological and philosophical quest

Quantum computing, by its very nature, invites us to rethink our relationship with reality, with the infinitely small, and with the complexity of the world. Where classical computing has enabled us to tame calculation and organize the overwhelming mass of information, quantum computing opens a window onto the unknown, a domain where the laws of classical physics give way to a universe of superpositions and uncertainties.

It is no longer just a matter of power or speed but of exploring dimensions of reality previously inaccessible, addressing problems that, by their very nature, defy our intuition and cognitive limits.

Through these fragile and sophisticated machines, we touch the boundary between the known and the unknown, between what is and what could be. This technological quest could almost resonate as a philosophical one: to understand, to predict, and perhaps even to master the mysteries of the universe.

The question remains open: how far will this exploration take us, and what will we discover about ourselves along the way?