In our previous blog post, we discussed large language models (LLMs) and their usefulness in business. Today, we focus on small language models (SLMs), which are, in essence, lighter versions of LLMs.

In the field of artificial intelligence, the size of a language model is often associated with its capacity. Large language models, such as GPT-4, currently dominate the sector, demonstrating impressive abilities in understanding and generating natural language.

However, an emerging trend shows that smaller models, long overshadowed by their larger counterparts, are gaining importance and are now proving to be powerful tools for various AI applications.

What is a Small Language Model?

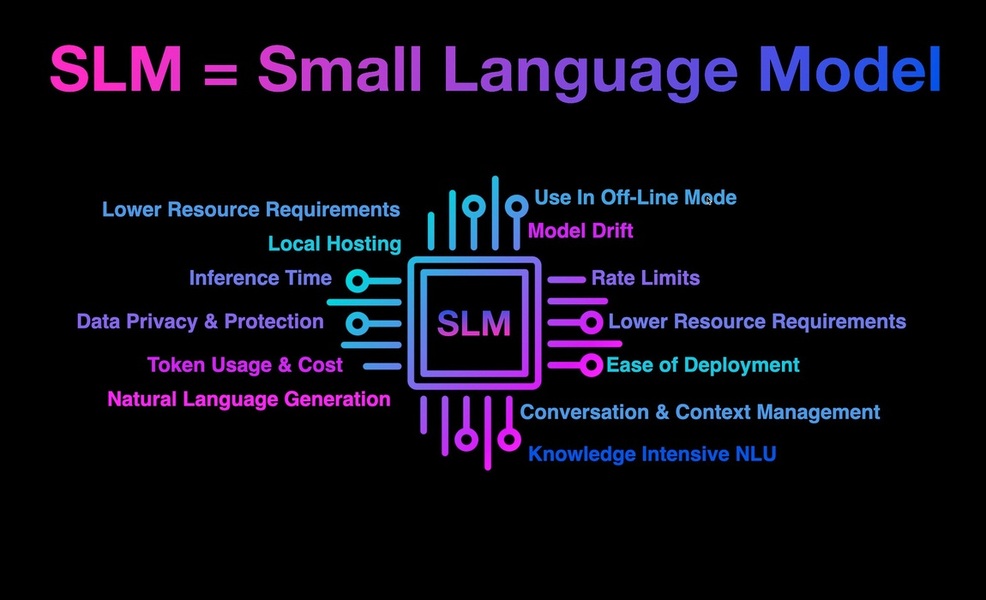

A small language model is an AI model designed to understand and generate text efficiently while using fewer computational resources. Unlike a large language model, which may have tens or even hundreds of billions of parameters and require enormous computational power to process large volumes of data, an SLM typically has far fewer parameters and is designed for more specialized tasks.

An example of an SLM is Mistral 7B, a model with only about 7 billion parameters. Compared to the models behind ChatGPT, Mistral 7B is more modest in size but can be very effective for specific tasks such as text classification or simple response generation. Its lightweight architecture allows it to run on machines with limited resources, making it a good fit for businesses with budget or technological constraints.
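To make "limited resources" more concrete, here is a rough back-of-the-envelope sketch of how much memory the weights of a 7-billion-parameter model occupy at different numeric precisions. The helper name `weight_memory_gb` is ours, the 7e9 figure is the round number used above, and the estimate covers the weights only: activations, caches, and runtime overhead are deliberately ignored, so real usage will be higher.

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAMETER = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_parameters: float, precision: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * BYTES_PER_PARAMETER[precision] / 1e9


# Round parameter count taken from the article; an approximation, not an exact figure.
NUM_PARAMETERS = 7e9

for precision in BYTES_PER_PARAMETER:
    print(f"{precision:>8}: {weight_memory_gb(NUM_PARAMETERS, precision):.1f} GB")
```

Under these assumptions, the weights take roughly 14 GB at 16-bit precision and about 3.5 GB when quantized to 4 bits, which is why a model of this size can fit on a single workstation or a well-equipped laptop.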

Advantages of Small Language Models

Due to their smaller size, SLMs are faster, less expensive to deploy, and can be optimized for applications where resources are limited, such as mobile devices or industry-specific environments.

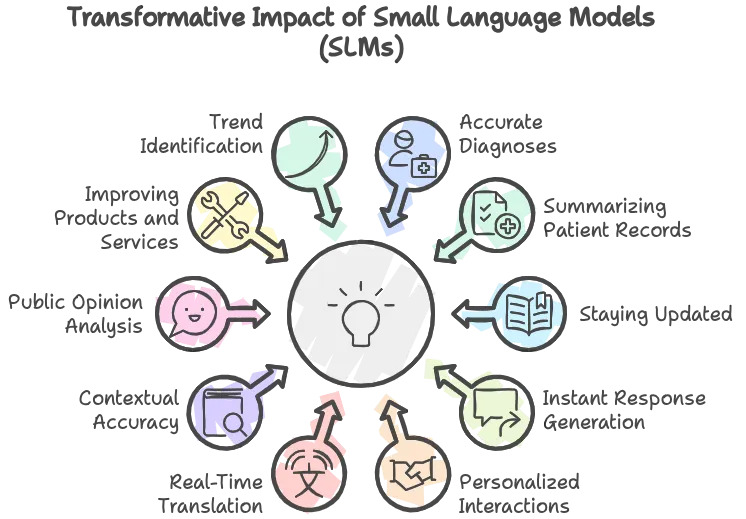

When built with small language models, generative AI applications can deliver a high level of accuracy with minimal overhead. Fine-tuned AI models thus provide a game-changing solution for businesses seeking precision, efficiency, and data security in many industries:

IT Services: Customer support is key to maintaining user satisfaction and loyalty. Small language models can be used to improve the efficiency of help desks by analyzing support tickets, live chat conversations, and customer emails to identify recurring patterns and common issues.

Governments: SLMs can analyze vast datasets from various sources, such as demographic statistics, public health reports, citizen complaints, or economic trends, to extract relevant insights and facilitate informed decision-making.

Banking Sector: Small language models can analyze transactions in real time to detect suspicious and potentially fraudulent activities. They also help assess credit risks and identify unusual behavior, contributing to financial security and stability.

Healthcare: SLMs can assist in analyzing patient symptoms and suggesting possible diagnoses. They can also automate medical record management, ensuring better organization of patient data and facilitating treatment follow-up.

Commerce: Small language models can analyze various data such as sales histories, market trends, and even customer reviews to provide more accurate demand forecasts.

The Importance of SLMs in Data Protection

1. Complete control over training and model customization data

- Privacy: Organizations can ensure that sensitive or private data never leaves their infrastructure. By using an SLM, training data remains internal, reducing the risk of data leaks or privacy breaches.

- Customization: SLMs allow fine-tuned customization to meet a company’s specific needs. This includes training on internal, domain-specific data, enhancing the model’s relevance and effectiveness while ensuring the protection of sensitive information.

2. Deployment in closed networks without internet traffic

- Enhanced security: Data and models remain protected from unauthorized access, cyberattacks, and data interception. Deployment on a closed network eliminates many attack vectors that exist when data must travel through external networks.

- Data sovereignty: Organizations subject to strict regulations (such as governments or sensitive industries) can retain complete control over where their data is stored and how it is managed, which helps them meet local sovereignty and regulatory requirements.

3. Open source code and transparency

- Transparency: With open-source code, organizations can inspect, audit, and modify the model’s code. This ensures that the model has no undesirable features or potential security vulnerabilities. Such transparency is crucial for building trust in the model and ensuring secure use.

- Adaptability: Organizations can adapt the source code to meet specific needs or integrate additional security measures, such as encryption protocols or enhanced access control mechanisms.

4. Resource efficiency and cost reduction

- Cost reduction: Due to their lower computational and storage requirements, SLMs can be deployed on local infrastructures without requiring massive investments in hardware or cloud services. This reduces operational costs and allows organizations to maintain local control over their data.

- Energy efficiency: SLMs consume less energy, which both lowers costs and reduces the company’s carbon footprint, while remaining compatible with internal data security policies.

5. Technological resilience and independence

- Autonomy: Organizations can continue to use, update, and improve their models without being subject to policy changes or service interruptions from external vendors. This independence is crucial for maintaining operational continuity, especially in sensitive or critical contexts.

- Technological sovereignty: By choosing open-source solutions and maintaining control over the infrastructure, organizations can avoid vendor lock-in, giving them greater flexibility to adapt to changing needs and evolving regulations.

The Power of Small Language Models

Recent research has shown that models with only 1 to 10 million parameters can master basic linguistic skills. For instance, an 8-million-parameter model achieved about 59% accuracy on the GLUE benchmark in 2023. These findings demonstrate that even small models can be effective in specific language processing tasks.

Performance appears to stabilize after reaching a certain scale, around 200 to 300 million parameters, indicating that further size increases yield diminishing returns. This plateau represents an ideal point for commercially deployable SLMs, balancing capacity and efficiency.

The Advantage of SLMs in Cascade Architecture

Using SLMs in a cascade architecture allows complex processes to be broken down into smaller steps. Much like a set of LEGO bricks, each small language model acts as a specialized brick that performs a specific task. By assembling these bricks step by step, we build a larger and more robust solution. This approach lets problems be addressed incrementally, with the ability to adjust or replace individual pieces without rebuilding everything.

Unlike large language models, which often handle an entire task in a single pass, small models make it possible to build a collaborative chain in which each model passes its output to the next. This modular breakdown not only reduces computational load but also makes it easier to adjust and improve individual parts of the system without a complete overhaul, as is often required with LLMs.
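The cascade idea can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the three stage functions (`classify_intent`, `extract_reference`, `draft_reply`) are hypothetical stand-ins that use simple rules where a real system would call a small fine-tuned model, and the ticket text is invented for the example.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Stage = Callable[[State], State]


def classify_intent(state: State) -> State:
    """Stand-in for a small classifier model (keyword rule, for illustration only)."""
    intent = "billing" if "invoice" in state["text"].lower() else "general"
    return {**state, "intent": intent}


def extract_reference(state: State) -> State:
    """Stand-in for a small extraction model: pick the first '#123'-style token."""
    tokens = [t.strip(".,!?") for t in state["text"].split()]
    reference = next((t for t in tokens if t.startswith("#") and t[1:].isdigit()), "")
    return {**state, "reference": reference}


def draft_reply(state: State) -> State:
    """Stand-in for a small generation model: fill a template from earlier outputs."""
    target = state["reference"] or "your request"
    reply = f"Thanks for contacting {state['intent']} support about {target}."
    return {**state, "reply": reply}


def run_cascade(stages: List[Stage], text: str) -> State:
    """Pass the state through each stage in order; every stage adds its own field."""
    state: State = {"text": text}
    for stage in stages:
        state = stage(state)
    return state


pipeline = [classify_intent, extract_reference, draft_reply]
result = run_cascade(pipeline, "My invoice #4821 shows the wrong amount.")
print(result["reply"])
```

Because every stage has the same signature, any single brick can be replaced (for example, swapping the keyword rule for a fine-tuned classifier) without touching the rest of the chain.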

LLM vs SLM: Which Model to Choose?

LLMs such as GPT-4 are extremely complex models that require considerable computing resources: powerful processors, large amounts of RAM, and extensive storage space. For instance, training a model at the scale of GPT-4 is reported to have required thousands of GPUs running for weeks. This represents a significant cost in terms of hardware and energy consumption.

In contrast, SLMs such as DistilBERT or Mistral 7B require far fewer resources, making them more affordable and accessible for small businesses with limited budgets. For example, DistilBERT, a lighter version of BERT, can be deployed on standard computers and even some mobile devices.

_________________________________

LLMs are designed to handle a wide range of complex tasks that require deep language understanding. They can perform sentiment analysis, generate text summaries, translate documents, or answer questions with contextual and detailed responses.

SLMs, on the other hand, are better suited to specialized tasks or applications where speed and efficiency are crucial. These models are optimized for specific contexts and can be fine-tuned to excel in particular fields.

_________________________________

LLMs are powerful generalists that excel at tasks requiring a broad and versatile understanding of natural language. They are ideal for applications needing a vast knowledge base, such as recommendation systems, creative text generation, or machine translation. For instance, DeepL, a translation service, uses LLMs to provide precise contextual translations in multiple languages.

SLMs are specialists designed for well-defined, narrower tasks. Their ability to be quickly adapted for specific tasks makes them perfect for applications like text classification or automated responses in customer support systems.

_________________________________

The cost and time required for implementation are also crucial factors when choosing between an LLM and an SLM. Training an LLM not only demands considerable hardware and energy resources but also takes significant time to reach an acceptable level of performance. Additionally, fine-tuning for specific applications can be time-consuming and costly.

SLMs, with their more modest size, are much faster to train and deploy. They require less time for fine-tuning, making them well suited to companies that want a quick return on investment or need ready-to-use solutions on short deadlines.

A Promising Future for SLMs

The choice between a Large Language Model and a Small Language Model depends on numerous factors that vary according to the specific needs of each business or application.

One thing is certain: the era of small language models marks a turning point in the artificial intelligence landscape. Businesses across all sectors can now leverage the precision and efficiency of models tailored to their industry to achieve significant gains in accuracy, security, and productivity.